In a previous post, I introduced a suite of JavaScript tools that can be used to reformat PGN files and determine square utilization (traffic) and square occupancy (parking) for different pieces. Since that post, I have rewritten the code and improved the functionality of each of these tools. I have used these tools to collect data on square utilization or occupancy, which I then process further in an Excel file (which I will make available shortly) and use to generate heatmaps with Plotly. However, Plotly was cumbersome to use, because I had to reformat the heatmap for each data set.

Here, I am introducing another JavaScript tool that takes square utilization or occupancy data and generates customizable heatmaps specifically for chess. It can be found at the following address: http://djcamenares.x10.mx/chess/heatmap.shtml

In the rest of this post, I describe how to use the features of the heatmap program and detail other updates to the site. Please feel free to share your comments regarding this tool, especially if you used it to generate interesting insights!

Customizable Chessboard Heatmap

The Chessboard Heatmap can represent tab-delimited data (entered into the Input field) as a heatmap the size of a chessboard. The data should consist of one value for each chessboard square, starting with the square A8 and moving left to right, for 64 values in total. The results from the square utilization or occupancy tool are already in the correct format for this analysis.
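To make the expected input concrete, here is a minimal sketch of how such data could be read into square-keyed values. The function name `parseBoardValues` is hypothetical and not taken from the tool itself, and the sketch assumes the values run a8 to h8, then a7 to h7, and so on down to h1.

```javascript
// Hypothetical helper, not the tool's own code.
// Assumption: values run a8..h8, then a7..h7, and so on down to a1..h1.
const FILES = ["a", "b", "c", "d", "e", "f", "g", "h"];

function parseBoardValues(input) {
  // Split on tabs and line breaks so pasted spreadsheet rows also work.
  const values = input
    .trim()
    .split(/[\t\r\n]+/)
    .map(Number);

  if (values.length !== 64 || values.some(Number.isNaN)) {
    throw new Error("Expected 64 numeric values, got " + values.length);
  }

  const board = {};
  values.forEach((value, index) => {
    const file = FILES[index % 8];           // column within the row
    const rank = 8 - Math.floor(index / 8);  // first row is rank 8
    board[file + rank] = value;
  });
  return board;
}
```

With this layout, the first value ends up on `a8` and the last on `h1`.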

There are several ways you can modify the output, according to your taste.

Minimum / Maximum

A heatmap displays different parts of a grid (or other shape) in colors that correspond to the input numbers. The colors or shades form a scale defined by the maximum and minimum of the dataset. If you leave these input values as Automatic, the maximum and minimum of the given dataset will be determined automatically and applied to the calculations. You can also specify your own max or min value, which could be useful if you are generating several heatmaps from multiple datasets and want a consistent scale.
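As a rough illustration of the Automatic versus user-specified behavior, the sketch below resolves a range and places a value within it. The names `resolveRange` and `normalize` are hypothetical, `null` stands in for the Automatic setting, and linear scaling between minimum and maximum is an assumption about how the tool works.

```javascript
// Hypothetical sketch of the Minimum / Maximum option.
// Passing null for min or max stands in for the "Automatic" setting.
function resolveRange(values, min = null, max = null) {
  const lo = min === null ? Math.min(...values) : min;
  const hi = max === null ? Math.max(...values) : max;
  if (hi <= lo) {
    throw new Error("Maximum must be greater than minimum");
  }
  return { lo, hi };
}

// Assumption: values are placed linearly between minimum (0) and maximum (1).
// Anything outside a user-specified range is clamped to the nearest end.
function normalize(value, lo, hi) {
  const fraction = (value - lo) / (hi - lo);
  return Math.min(1, Math.max(0, fraction));
}
```

Using the same user-defined range for several datasets means a given value maps to the same position on every heatmap, which is what makes the maps comparable.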

Color Theme

This is a fairly straightforward option, where you get to pick a color theme for the heatmap or chessboard representation. "Mets", the default, ranges from Blue to Orange values. Each color theme has up to 20 shades available.
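One simple way to produce a 20-shade theme is to interpolate between two endpoint colors. This is only a sketch: `buildPalette` is a hypothetical name, the RGB endpoints are placeholders rather than the actual "Mets" colors, and the tool may well use hand-picked shades instead.

```javascript
// Hypothetical sketch: build a palette by linear interpolation in RGB.
// The endpoint colors below are placeholders, not the tool's real theme values.
function buildPalette(from, to, shades = 20) {
  const palette = [];
  for (let i = 0; i < shades; i++) {
    const t = shades === 1 ? 0 : i / (shades - 1); // 0 at the minimum, 1 at the maximum
    const rgb = from.map((c, k) => Math.round(c + (to[k] - c) * t));
    palette.push("rgb(" + rgb.join(", ") + ")");
  }
  return palette;
}

// A blue-to-orange example: index 0 is the minimum shade, index 19 the maximum.
const blueToOrange = buildPalette([0, 45, 114], [255, 89, 16]);
```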

Square Settings

This setting has three options. The default, Scaled, Empty, will display a heatmap, with color shades scaled to the square values, but without any text listed in each square. Scaled, Text will also display a scaled colored heatmap, but with text values in each square. Another option below (Text Output) controls the exact nature of the text / values shown in each square. Fixed, Text will have a fixed chess / checkerboard pattern (alternating light and dark squares) instead of a shaded heatmap, but will contain text values in each square.

Scale

This is a useful, but perhaps not obvious, feature that supplements the use of custom maximums well. In short, it controls the range of values around the median that will be distinguished by different color categories.

For example, 50 (0-100) will have different shades representing values anywhere from 0 (the minimum) to 100 (the maximum). The next option, 25 (25-75), makes finer distinctions between values from 25 to 75% of the maximum value. Anything lower than 25 shares a single shade, as does anything higher than 75.

Another way to describe this feature is to imagine it as a zoom tool, allowing you to magnify differences around the middle or median point in the data.
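The zoom idea can be sketched as a function that turns a value's position between minimum and maximum into one of 20 shade indices. The name `shadeIndex` is hypothetical, and the exact binning (one shade for everything below the window, one for everything above, and the remaining shades spread evenly inside it) is an assumption rather than the tool's confirmed behavior.

```javascript
// Hypothetical sketch of the Scale option with 20 shades (indices 0 to 19).
// `fraction` is a value's position between the minimum (0) and maximum (1).
// `scale` is the half-width, in percent, of the window around the midpoint.
function shadeIndex(fraction, scale = 50, shades = 20) {
  const low = (50 - scale) / 100;
  const high = (50 + scale) / 100;
  if (fraction <= low) return 0;            // grouped with the minimum
  if (fraction >= high) return shades - 1;  // grouped with the maximum
  // Spread the remaining shades evenly across the window.
  const inner = shades - 2;
  const position = (fraction - low) / (high - low);
  return 1 + Math.min(inner - 1, Math.floor(position * inner));
}
```

Under these assumptions, two values sitting at 48.25% and 52.05% of the range fall into neighboring shades with the default scale of 50, but land many shades apart with a scale of 3 (47-53).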

Text Output

If you selected text output in the Square Settings feature, you can control just what type of text is returned here. The default, Original, returns the same values you input in the beginning. In the course of calculations for the heatmap, the program will normalize the data based upon the maximum and minimum values. You can have this normalization reported as an amount out of 100 (for example, the value 37 in this reporting means that the square has a value that is 37% of the maximum). You can also select the same normalization reported out of 1,000 (in keeping with the example above for a square that is 37% of maximum, this would report the value 370). The Normalization (1,000) feature reports values in a fashion similar to how baseball batting averages are reported.
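The three text modes could be sketched as follows. The function name `squareText` and the mode strings are hypothetical, and both the linear normalization between minimum and maximum and the rounding to whole numbers are assumptions.

```javascript
// Hypothetical sketch of the three Text Output modes.
// Mode names are illustrative; rounding to whole numbers is an assumption.
function squareText(value, lo, hi, mode = "original") {
  if (mode === "original") return String(value);
  const fraction = (value - lo) / (hi - lo);
  const outOf = mode === "normalized1000" ? 1000 : 100;
  return String(Math.round(fraction * outOf));
}

// A square at 37% of a 0 to 100 range, in each mode.
const asOriginal = squareText(37, 0, 100);                    // "37"
const outOf100 = squareText(37, 0, 100, "normalized100");     // "37"
const outOf1000 = squareText(37, 0, 100, "normalized1000");   // "370"
```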

Axis-Label

This simple feature controls whether the heatmap board will have labelled Ranks and/or Files.

Example Heatmaps

Still confused about the heatmap tool? Hopefully, the following examples will give you an idea of what you can do using this program. These are all generated using the square utilization and occupancy data from a database of Bobby Fischer's White games. I hope you enjoy this gallery, and get some ideas for your own data visualization.

For any of the below heatmaps, please click for a larger image.

This is a simple heatmap showing the utilization, or traffic, of any and all of White's pieces in Fischer's games as White. Orange is the positive end of the scale, representing the maximum values, and Blue is the minimum side of the scale. In this image, the maximum and minimum were automatically calculated from the data.

This same heatmap can be shown with the values for each square listed:

In this example, the numbers are normalized to 1,000. Thus the square d3, with a value of 432, sees only 43.2% as much traffic as d4, the square with the maximum value.

The same heatmap, again without numbers, can also be displayed with a different color scheme, this time ranging from dark orange (max) to white (min), in a fashion similar to that of the analysis by Seth Kadish.

Data can also be shown on a normally-shaded chessboard.

This is from a slightly different dataset. Here, you can see again the values ranging from 0 to 1,000, but this time they are in the context of a normal chessboard. Note here that a different color scheme (green) has been used.

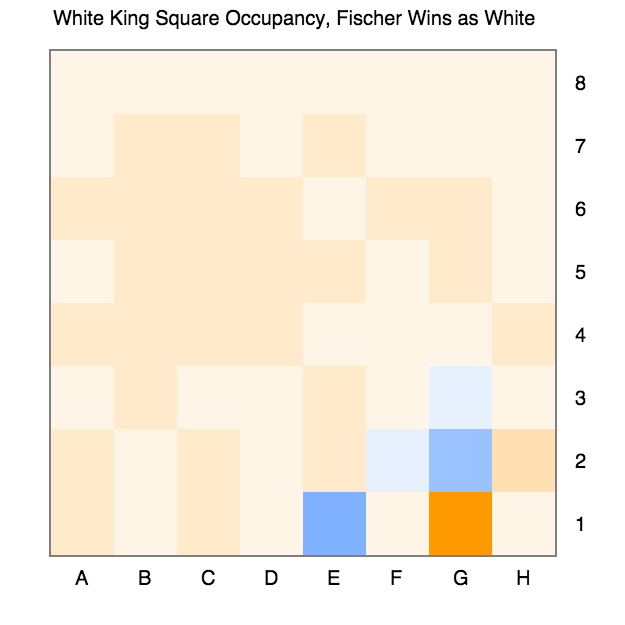

The next few examples highlight both user-specified maximum / minimum values as well as a changing scale. For now, I will only discuss these maps in terms of how they highlight these different features. In the final section below, I also discuss the nature of the data representing these charts.

The above heatmap is generated using the automatic calculation of minimum and maximum, and the default scale (50, 0-100). The minimum in this map is e1, and the maximum value is g1, with most values near the median.

We can change the scale to see finer differences between squares with values close to the median.

The above is the same data, with the same maximum and minimum but with the 10 scale utilized. In other words, anything that is 60% of the maximum or greater has the same shade, as does everything that is 40% of the max or below. The other 18 shades differentiate between values within these bounds, allowing smaller differences to be visualized. Note that the squares with the max and min are still the correct shade.

In the above dataset, the actual maximum and minimum are not that far from each other (41 and -35, respectively). However, we can set the maximum and minimum to the theoretical limits for this dataset, 1,000 and -1,000. If we do this, most of the values will be very close to the median, and very far from these extreme maximum and minimum values.

The above heatmap uses the same dataset as before, with the default scale (50, 0-100), but with the user-defined max and min of 1,000 and -1,000. With the maximum and minimum set at numbers so much larger than the differences actually in the data, almost every square has the same shade. We can still visualize the small differences, however, by changing the scale.

By changing the scale to 3 (47-53), we are now using most shades to differentiate only between values from 3% below the median to 3% above it. Even though we have changed the maximum and minimum, by also changing the scale, we can visualize the same trends as before: e1 is a low value, g1 is a high value.

Why would you want to bother changing the max and the scale if it shows the same trends? Automatically calculating the maximum can make it harder to compare different heatmaps. However, by making heatmaps with the same user-defined max and scale, you can directly compare the results from different heatmaps. This can be useful for reasons described below.

What if we want to add more information to this map? We can add some text, and can choose the type of output. Data normalized to 1,000 was already featured above.

The above heatmap is the same dataset, this time with the 1,000 maximum and -1,000 minimum, with the 7 scale applied (notice the differences are slightly less pronounced than with the 3 scale). Here, the original value for each square is displayed.

We can also display this same data in each square, but normalized to a maximum of 100.

Keep in mind, however, these values all seem close to 50% of maximum, because the maximum in this case is the astronomical value of 1,000 as defined by the user.

Additional Updates

For any readers that have already seen the previous post on PGN reformatting, square utilization and occupancy, I should note here I have updated the code for these three tools. The PGN reformatting tool is now used to convert PGN files to FEN and FEN-X formats. The other two tools now come with additional options, and have been streamlined for efficiency. For example, the square utilization tool now can deliver data on specific pieces, instead of just the total sum of piece movements.

Generating Comparisons, Differential Data and Maps (A Preview)

Reading about all of the features and exploring the use of these different JavaScript programs can be fun, but what is the point? Can they do anything useful?

I think that there are some interesting applications for these tools. For example, you can compare the square utilization or occupancy between two great champions. Perhaps even more interesting is to compare the data between a database where a certain player (playing White) always loses, and another where they always win. From this, you can generate a differential map, showing which squares, piece placements or movements are found more often in wins versus losses.

In fact, this is what the last few maps above represent; higher values mean that square was featured more often in Fischer's wins, while lower values were more common in losses. This makes some sense; a securely castled King on g1 is a positive and helpful feature for White in most positions. By contrast, an uncastled King on e1 is featured more often in positions that lead to Black victories (which in this database means a Fischer loss).
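A differential map of this kind can be sketched as a square-by-square subtraction. The post does not spell out the exact calculation, so everything here is an assumption: the names `perThousand` and `differential` are hypothetical, and each dataset is assumed to be expressed per 1,000 of some caller-supplied total (for example, the number of positions sampled), which keeps the differences between -1,000 and 1,000.

```javascript
// Hypothetical sketch: differential data between a "wins" and a "losses" dataset.
// Assumption: raw counts are scaled per 1,000 of a total supplied by the caller,
// so every difference falls between -1000 and 1000.
function perThousand(counts, total) {
  if (total <= 0) throw new Error("Total must be positive");
  return counts.map((count) => Math.round((count / total) * 1000));
}

function differential(winCounts, winTotal, lossCounts, lossTotal) {
  if (winCounts.length !== lossCounts.length) {
    throw new Error("Datasets must cover the same squares");
  }
  const wins = perThousand(winCounts, winTotal);
  const losses = perThousand(lossCounts, lossTotal);
  // Positive: more common in wins. Negative: more common in losses.
  return wins.map((value, i) => value - losses[i]);
}
```

Scaling each database by its own total before subtracting keeps a larger database from dominating the comparison.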

Generating differential data can be useful when looking at players' databases, or even when looking at wins versus losses in particular opening lines. It is this latter application I am most interested in, and you can be sure to see many more posts in the future (or whenever I have the time during this busy semester) revolving around this topic.
